Examining the Perceived Performance of Artificial Intelligence on the Behavioral Front

Abstract

Abstract Views: 0

Abstract Views: 0

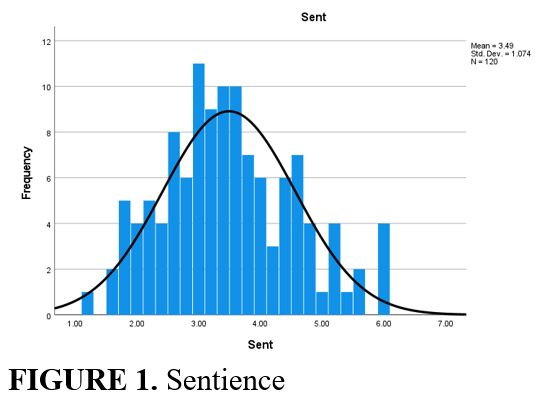

Artificial intelligence (AI) and its associated technologies have advanced rapidly, especially in the 21st century. While the proficiency of knowledge-based AI is well established, behavior-based AI still faces significant challenges, and it remains uncertain how effectively AI systems can perform behavioral roles that typically belong to human beings. Drawing on AI-driven social theory, this research argues that the development of AI systems is closely intertwined with social facets. Since all AI technologies are rooted in anthropocentrism, the study examines users' confidence in AI's ability to assume behavioral roles. For the empirical analysis, data were collected from 120 university students. Rudimentary scales were designed to gauge the influence of AI across eight behavioral parameters: sentience, personality, leadership, ethics, decision-making, power, conflict management, and emotions. Descriptive data analysis revealed mixed results. Among the eight behavioral parameters, respondents showed high confidence in AI's decision-making capabilities. They also showed moderate confidence in AI's ability to exercise power and manage conflicts, whereas their confidence in AI's emotional capabilities was relatively low. Female respondents were more likely than male respondents to believe in the prospect of AI sentience; no significant difference between male and female perceptions was found for the remaining parameters. These indeterminate findings indicate that users are at once confident in and ambivalent about the perceived performance of behavioral AI, and the middle-of-the-road results suggest skepticism about AI's behavioral capabilities.

Copyright (c) 2024 Saman Javed

This work is licensed under a Creative Commons Attribution 4.0 International License.

UMT-AIR follows an open-access publishing policy, and the full text of all published articles is available free of charge immediately upon publication of an issue. The journal's contents are published and distributed under the terms of the Creative Commons Attribution 4.0 International (CC-BY 4.0) license. Submitting work to the journal therefore implies that it is the authors' original, unpublished work (neither published previously nor accepted or under consideration for publication elsewhere). On acceptance of a manuscript for publication, the corresponding author, on behalf of all co-authors, will sign and submit a completed Copyright and Author Consent Form.